What is data quality or the quality of the data

Data quality encompasses a set of rules that ensure a minimum quality of the data that has been received to help ensure that the extracted results are reliable and of quality.

Today millions and millions of data are generated every second. Many of them are of poor quality and will only contribute errors to our analysis. That is why any company must have minimum quality in the data it processes and analyses.

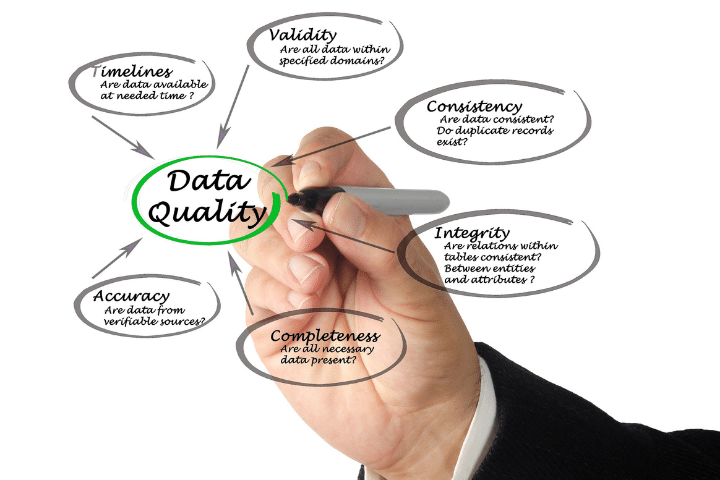

Data quality characteristics

There are certain characteristics or rules that define the quality of the input data. Below we give a brief explanation of each of them.

Integrity

Integrity refers to the ability to maintain the correctness and completeness of information in a database. During data ingestion or updating processes, integrity may be lost due to the addition of incorrect information.

For this reason, it is vital to comply with this characteristic to maintain good data quality and to be able to trust the analysis we perform on it.

Accuracy

Accuracy is a data quality property that refers to how precise the stored values are and that they do not give rise to ambiguities.

Reliability

This property is directly related to the previous two and refers to the extent to which we can trust the data we have stored.

To measure reliability we can use a set of standards and rules that determine the degree of reliability and we can discard unreliable values before saving them in the data warehouse.

Importance of data quality

Big data and artificial intelligence have reached many sectors relevant to society such as health, the economy or the army. Statistical models are built from a large amount of data.

It is important that the quality of the data is as similar as possible to reality. Many times the data contains errors and is not completely accurate. It is for this reason that it is important to have certain rules that eliminate inaccurate data and only select those that meet them. This is where “data quality” comes in.

It is highly recommended to review all the rules used to determine the quality of the information and hold periodic meetings to determine if the data from the last few months needs new cleaning rules or not.

Data transformations

In many cases, the data we have is correct. However, they have to be transformed so that they fit well with what machine learning models require.

A fundamental step in creating statistical models is the standardization of information. This normalization is carried out so that all model features have values within the same range of numbers.

On certain occasions it may be important to scale the input data so that it follows a Gaussian distribution, since it is the one that best adapts to certain machine learning techniques.

Other options are, for example, the linear combination of features or dimensionality reduction with mathematical techniques such as PCA (Principal Component Analysis).

Later we will do an article explaining the different transformations available and how we can apply them to our data using the scikit-learn Python library.